SNN Application - Simple and Simple Hybrid

The Brain Inspired AI - Spiking Neural Networks

Imagine a computer that thinks a bit more like a brain: instead of just crunching numbers, it fires tiny signals, or spikes, to process information. That's the idea behind Spiking Neural Networks (SNNs), which are often described as the third generation of neural networks. Traditional models pass continuous values through every layer for every input. SNNs instead communicate through discrete bursts of activity, much like the neurons in your brain. Because a spiking neuron only does work when it fires, SNNs can be very energy-efficient, especially on dedicated hardware. That makes them an exciting candidate for futuristic, low-power AI applications!

How SNNs Differ from Traditional Models

Most AI models we know, such as conventional artificial neural networks (ANNs) and convolutional neural networks (CNNs), process continuous-valued signals: every neuron produces an output for every input, much like a factory line that never stops. SNNs, however, are more like sprinters. A spiking neuron accumulates its input over time and only fires a quick, binary spike when its internal state crosses a threshold. This event-driven approach conserves energy and makes SNNs well suited to devices that need to react quickly without draining power, like robots or wearable tech. Sparse, spike-based signalling is also one of the reasons a complex biological machine such as the human brain can run on roughly 20 watts.
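To see why spike-based signals are sparse, here is a minimal sketch that turns an image into spike trains. This is an illustration only: the README does not say how the app encodes its input, so the Bernoulli rate coding, the 25 time steps, and the hypothetical `rate_encode` helper are all assumptions. Each pixel fires at each time step with probability equal to its intensity, so dark pixels never produce events.

```python
import numpy as np

def rate_encode(image, num_steps=25, seed=0):
    """Hypothetical helper: convert pixel intensities in [0, 1] into binary spike trains.

    Assumes Bernoulli rate coding: at every time step, each pixel fires with
    probability equal to its intensity. Output shape: (num_steps, *image.shape).
    """
    rng = np.random.default_rng(seed)
    image = np.clip(image, 0.0, 1.0)
    return (rng.random((num_steps, *image.shape)) < image).astype(np.uint8)

# A toy 28x28 "digit": a bright vertical stroke on a dark background.
img = np.zeros((28, 28))
img[4:24, 13:15] = 1.0

spikes = rate_encode(img)
print(spikes.shape)                          # (25, 28, 28)
# Fraction of (time step, pixel) slots that carry a spike.
print(f"active events: {spikes.mean():.3f}")  # active events: 0.051
```

Only about 5% of the slots carry an event here; everything else is silence that a spiking network does not have to process.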

Why should we care? SNN Applications.

SNNs aren't just a science experiment: they are an active research area with promising real-world uses. Here's where they're making waves:

Neuromorphic Hardware: Brain-inspired chips process information as spikes, much like biological neurons, and SNNs are the natural model to run on them.

Smart Robots: For robots that need to make real-time decisions in energy-limited situations, SNNs are a game-changer.

Assistive Devices: By mimicking natural neural firing, SNNs could help prosthetics communicate more naturally with the human body.

Pattern Recognition: SNNs can learn to recognize patterns in data, which makes them promising for tasks like image classification, facial recognition, anomaly detection, and more.

What this project brings to the table

This project dives into the capabilities of several shallow SNN models, each with a unique twist. It explores:

Simple Models: Shallow networks built entirely from Integrate-and-Fire (IF) neurons or entirely from Leaky Integrate-and-Fire (LIF) neurons. Each can be tried individually.

Hybrid Models: Shallow networks that combine an IF layer with a LIF layer, aiming to get the best of both worlds. Each hybrid can also be tried individually.
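The difference between the two neuron types can be sketched in a few lines of Python. This is a minimal illustration, not the app's implementation: the threshold of 1.0, the reset-to-zero rule, and the leak factor `beta` are assumptions chosen for clarity, and `simulate_neuron` is a hypothetical helper. An IF neuron is treated as a LIF neuron with no leak (`beta = 1.0`).

```python
def simulate_neuron(inputs, beta=1.0, threshold=1.0):
    """Hypothetical helper: simulate one neuron over discrete time steps.

    beta == 1.0 -> Integrate-and-Fire (IF): the membrane potential never decays.
    beta <  1.0 -> Leaky Integrate-and-Fire (LIF): the potential is scaled by beta each step.
    A spike (1) is emitted when the potential reaches `threshold`; the potential then resets to 0.
    """
    v = 0.0
    spikes = []
    for current in inputs:
        v = beta * v + current  # leak (only if beta < 1), then integrate the input
        if v >= threshold:
            spikes.append(1)
            v = 0.0             # assumed reset-to-zero after a spike
        else:
            spikes.append(0)
    return spikes

weak_input = [0.3] * 10
print("IF :", simulate_neuron(weak_input, beta=1.0))  # IF : [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
print("LIF:", simulate_neuron(weak_input, beta=0.5))  # LIF: [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
```

With the same weak, constant input, the IF neuron eventually integrates its way to the threshold, while the LIF neuron leaks charge away as fast as it builds up (its potential settles near 0.6) and never fires. A hybrid model places one neuron type in the first layer and the other type in the second.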

Using the well-known MNIST dataset (those handwritten numbers you’ve seen in countless AI demos!), we’re putting these models to the test. The goal? To see how well they recognize and classify digits while keeping things efficient.

How to use the app

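Download any MNIST digit image and save it on your system as a PNG file. Choose a model, then choose a rotation range; the digit will be rotated by a random angle within that range so you can test the model's response to rotated inputs. Upload the digit at the designated location in the app. The image will be resized to 28x28 pixels before it is passed to the model.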
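The preprocessing steps above (resize to 28x28, then rotate by a random angle within the chosen range) can be sketched with NumPy alone. This is an assumption-laden sketch, not the code in app.py: the hypothetical helpers `resize_nearest`, `rotate_nearest`, and `preprocess` use nearest-neighbour sampling with zero fill, and the scaling of 0-255 pixels to [0, 1] is assumed. In a real app the PNG would first be read into a grayscale array, for example with Pillow's `Image.open(path).convert("L")`.

```python
import numpy as np

def resize_nearest(img, size=(28, 28)):
    """Hypothetical helper: nearest-neighbour resize of a 2-D grayscale array."""
    h, w = img.shape
    rows = (np.arange(size[0]) * h / size[0]).astype(int)
    cols = (np.arange(size[1]) * w / size[1]).astype(int)
    return img[rows[:, None], cols[None, :]]

def rotate_nearest(img, angle_deg):
    """Hypothetical helper: rotate a 2-D array about its centre (nearest neighbour, zero fill)."""
    h, w = img.shape
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2, (w - 1) / 2
    yy, xx = np.mgrid[0:h, 0:w]
    # Inverse mapping: for every output pixel, find the source pixel it comes from.
    src_x = np.cos(theta) * (xx - cx) + np.sin(theta) * (yy - cy) + cx
    src_y = -np.sin(theta) * (xx - cx) + np.cos(theta) * (yy - cy) + cy
    src_x = np.rint(src_x).astype(int)
    src_y = np.rint(src_y).astype(int)
    inside = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[inside] = img[src_y[inside], src_x[inside]]  # pixels mapped from outside stay 0
    return out

def preprocess(img, max_rotation_deg, seed=None):
    """Hypothetical pipeline: scale to [0, 1], resize to 28x28, rotate by a random angle."""
    rng = np.random.default_rng(seed)
    angle = rng.uniform(-max_rotation_deg, max_rotation_deg)
    img = img.astype(np.float32)
    if img.max() > 1.0:       # assume 8-bit input in the range 0-255
        img = img / 255.0
    return rotate_nearest(resize_nearest(img), angle), angle

digit = np.zeros((100, 100), dtype=np.uint8)
digit[20:80, 45:55] = 255                 # toy vertical stroke
x, angle = preprocess(digit, max_rotation_deg=30, seed=0)
print(x.shape, round(float(angle), 1))
```

Resizing before rotating keeps the output at exactly 28x28; a production app would more likely use a library resampler with interpolation.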

The models were trained on the MNIST dataset without data augmentation. The training process is available on request only: please write to me if you need the training notebook. The final deployment files are available at the GitHub link.

The IF, LIF, IFLIF, and LIFIF models are pretrained shallow SNNs. None of these networks has a softmax output layer. Instead, each model identifies the category of the input from the spiking patterns its neurons produce.
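One common way to read a class out of spikes without a softmax is a spike-count readout: the output neuron that fires most often wins. The sketch below assumes this scheme and ten output neurons (one per digit); the README does not state exactly how the app interprets its spiking patterns, and `predict_from_spikes` is a hypothetical helper.

```python
import numpy as np

def predict_from_spikes(output_spikes):
    """Hypothetical helper: predict a class from output-layer spike trains.

    output_spikes: array of shape (num_steps, num_classes) holding 0/1 spikes.
    The predicted digit is the output neuron that fired most often (ties go
    to the lowest index). No softmax is involved.
    """
    counts = output_spikes.sum(axis=0)
    return int(np.argmax(counts)), counts

# Toy record: 5 time steps, 10 output neurons (digits 0-9).
record = np.zeros((5, 10), dtype=np.uint8)
record[:, 7] = [1, 1, 0, 1, 1]  # neuron 7 fires 4 times
record[:, 1] = [0, 1, 0, 0, 1]  # neuron 1 fires twice
digit, counts = predict_from_spikes(record)
print(digit)            # 7
print(counts.tolist())  # [0, 2, 0, 0, 0, 0, 0, 4, 0, 0]
```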

All four models have two layers each. The IF model has two layers of Integrate-and-Fire neurons, the LIF model has two layers of Leaky Integrate-and-Fire neurons, the IFLIF model has an IF layer followed by a LIF layer, and the LIFIF model has a LIF layer followed by an IF layer. The model parameters can be found in app.py at the GitHub link.
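The four two-layer architectures can be sketched as one function with a configurable neuron type per layer. Everything numeric here is an assumption for illustration: the hidden size of 100, the LIF leak factor of 0.9, the threshold of 1.0, the 25 time steps, and the random, untrained weights. The real parameters live in app.py. The hypothetical `run_layer` and `shallow_snn` helpers return output spike counts, from which the predicted digit would be the neuron with the highest count.

```python
import numpy as np

def run_layer(in_spikes, weights, beta, threshold=1.0):
    """Hypothetical fully connected spiking layer, simulated over time.

    in_spikes: (num_steps, n_in) 0/1 array; weights: (n_in, n_out).
    beta == 1.0 gives IF neurons, beta < 1.0 gives LIF neurons.
    Returns output spikes of shape (num_steps, n_out).
    """
    num_steps = in_spikes.shape[0]
    v = np.zeros(weights.shape[1])
    out = np.zeros((num_steps, weights.shape[1]), dtype=np.uint8)
    for t in range(num_steps):
        v = beta * v + in_spikes[t] @ weights  # leak, then integrate the input current
        fired = v >= threshold
        out[t] = fired
        v[fired] = 0.0                         # reset the neurons that spiked
    return out

BETA = {"IF": 1.0, "LIF": 0.9}  # assumed leak factors (IF = no leak)

def shallow_snn(in_spikes, w1, w2, layer_types=("IF", "LIF")):
    """Two spiking layers, e.g. ("IF", "LIF") for an IFLIF-style model."""
    hidden = run_layer(in_spikes, w1, BETA[layer_types[0]])
    output = run_layer(hidden, w2, BETA[layer_types[1]])
    return output.sum(axis=0)  # spike count per output neuron

rng = np.random.default_rng(0)
w1 = rng.normal(0.0, 0.1, size=(784, 100))  # random, untrained weights
w2 = rng.normal(0.0, 0.3, size=(100, 10))
x = (rng.random((25, 784)) < 0.2).astype(np.float32)  # random input spikes

models = {"IF": ("IF", "IF"), "LIF": ("LIF", "LIF"),
          "IFLIF": ("IF", "LIF"), "LIFIF": ("LIF", "IF")}
for name, types in models.items():
    print(name, shallow_snn(x, w1, w2, types))
```

With untrained weights the counts are meaningless; the sketch only shows how swapping the neuron type per layer yields the four model variants.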

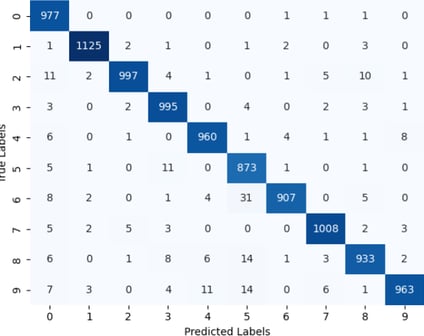

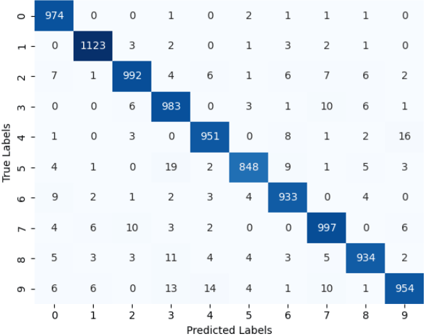

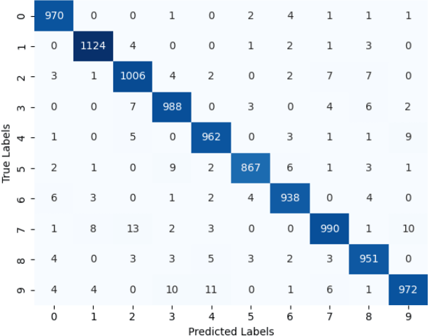

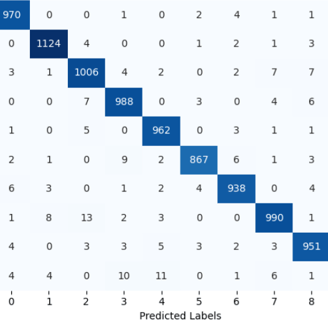

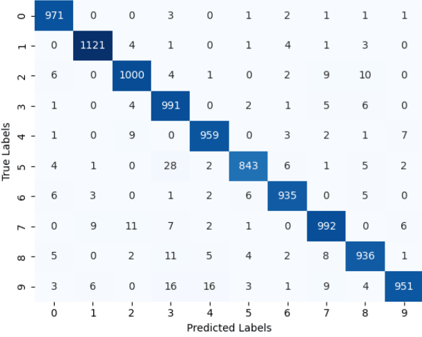

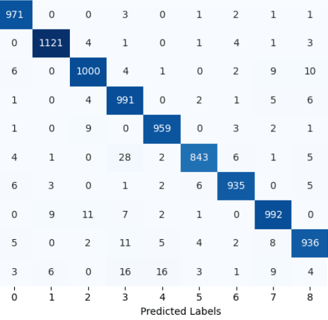

Each model has an associated confusion matrix that helps reveal which digits it misclassifies. It's a visual snapshot of where the model got things right and where it got… well, a bit confused.
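A confusion matrix is simple to compute: each cell counts how often a true digit (row) was predicted as a given digit (column). The sketch below uses made-up labels purely to show the mechanics; it is not how the matrices in this app were produced. `scikit-learn`'s `sklearn.metrics.confusion_matrix` follows the same row/column convention if you prefer a library call.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes=10):
    """Hypothetical helper: rows = true digit, columns = predicted digit."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # unbuffered add handles repeated (row, col) pairs
    return cm

# Made-up labels for illustration only.
y_true = np.array([0, 1, 2, 2, 7, 7, 9])
y_pred = np.array([0, 1, 2, 7, 7, 1, 4])
cm = confusion_matrix(y_true, y_pred)
print(cm[2, 7])                  # 1: one "2" was predicted as "7"
print(np.trace(cm) / cm.sum())   # accuracy: 4 of 7 predictions are correct
```

The diagonal holds the correct predictions, so bright off-diagonal cells point straight at the digit pairs a model tends to mix up.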

These comparisons offer a hands-on view of how SNNs can be lightweight yet powerful—capable of delivering big results without the big energy bill.